Object Detection in Unmanned Aerial Vehicles (UAV)

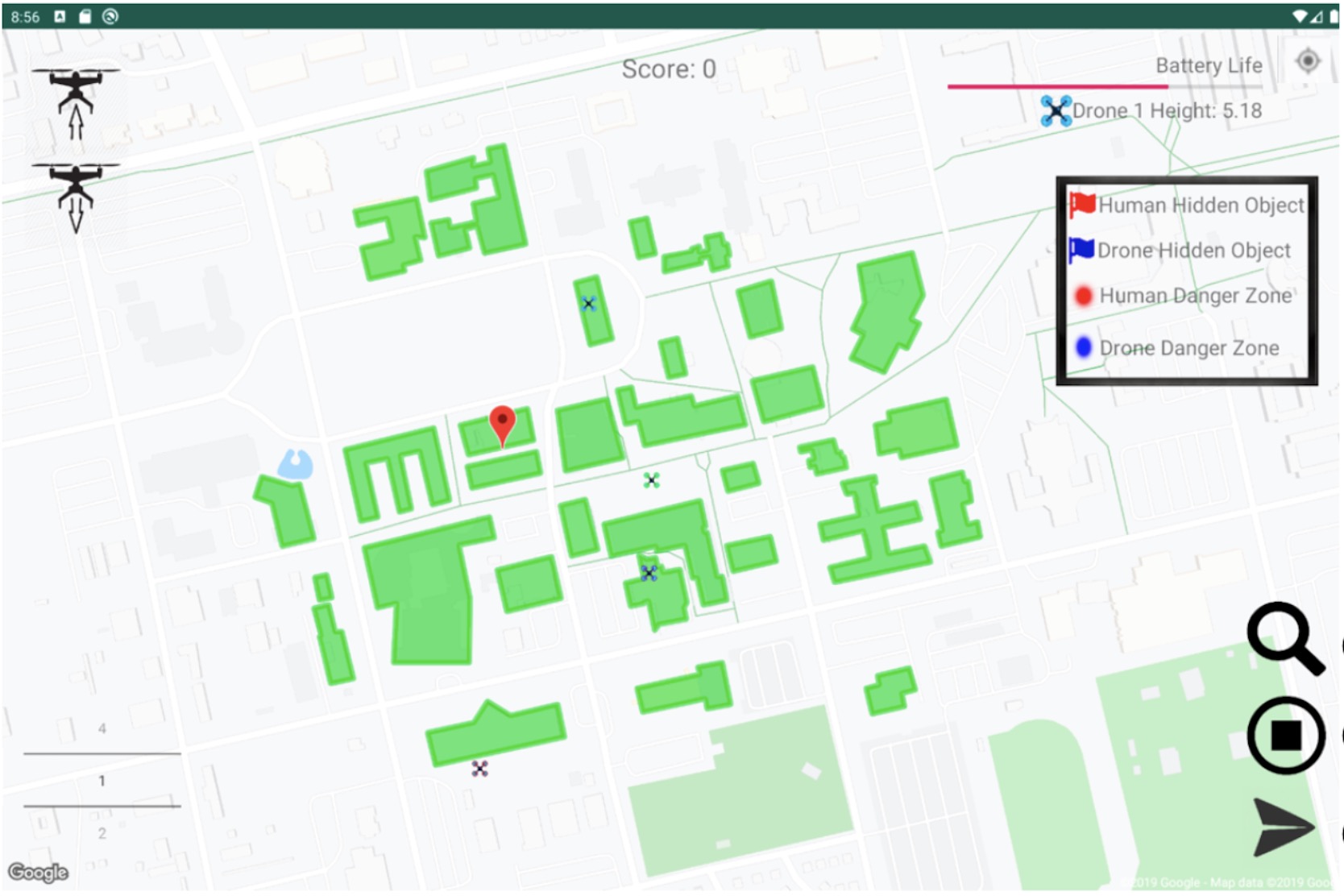

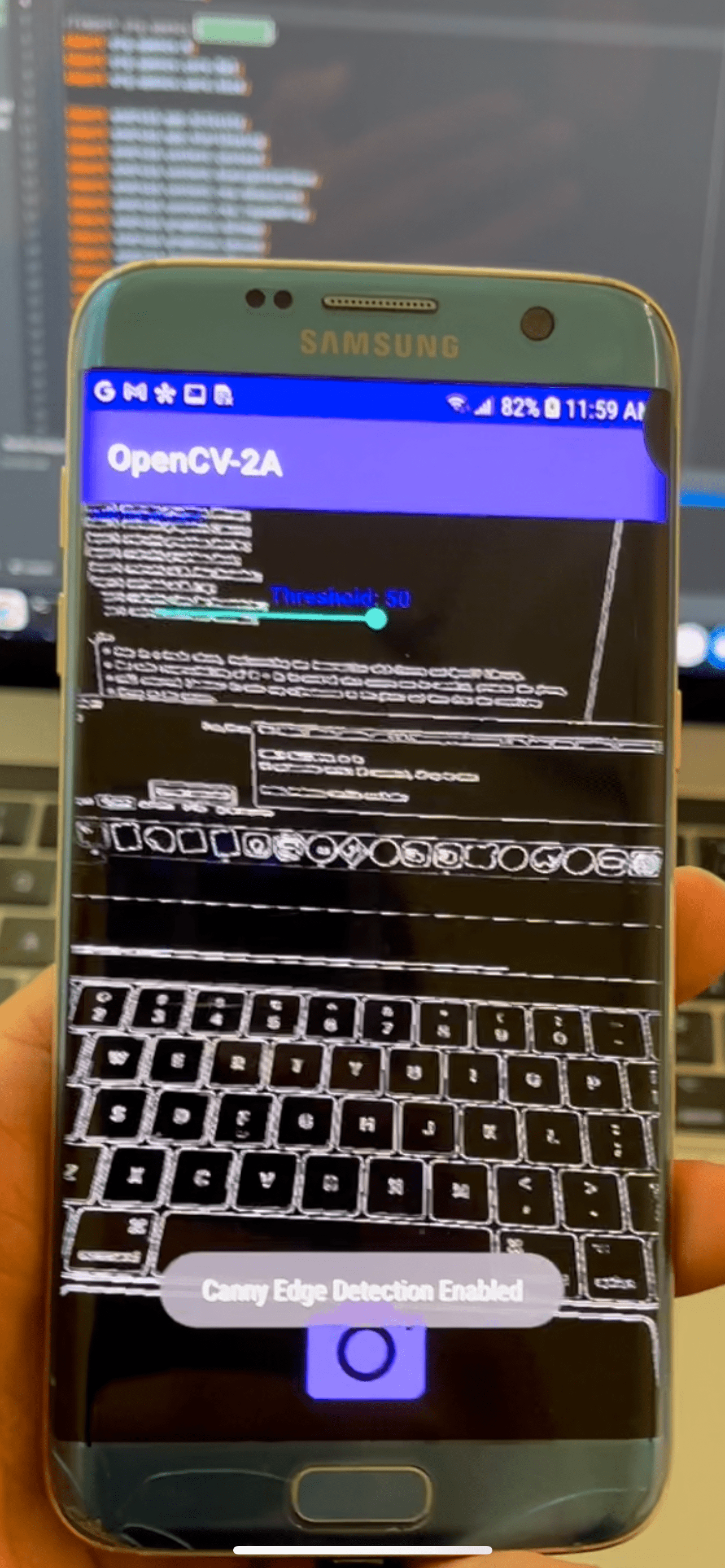

I developed a computer vision model for object detection in high-altitude drone imagery and trained it in Google Colab. The dataset was obtained in collaboration with Stanford University, with all imagery captured on its campus. The work resulted in a publication, one of only a few in this specialized area at the time. The model is built on the RetinaNet architecture and uses a feature pyramid network to extract features at multiple scales from the drone-captured data. Paired with my drone simulation software, which accurately maps to real-world coordinates, the model could support applications such as crowd monitoring, search and rescue operations, and delivery services.
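
To make the feature pyramid idea concrete, here is a minimal NumPy sketch of the top-down pathway that a feature pyramid network uses to combine coarse and fine feature maps. It is an illustration only, not the trained model from this project: the function names, channel counts, map sizes, and random weights are all assumptions, and the 3x3 smoothing convolutions that usually follow each merge are left out for brevity.

```python
import numpy as np

def lateral_1x1(feature, weight):
    """1x1 convolution: feature is (C_in, H, W), weight is (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", weight, feature)

def upsample2x(feature):
    """Nearest-neighbour upsampling by 2 along both spatial axes."""
    return feature.repeat(2, axis=1).repeat(2, axis=2)

def fpn_top_down(backbone_maps, lateral_weights):
    """Merge backbone maps (ordered fine to coarse) into pyramid levels.

    Each level is the lateral projection of its backbone map plus the
    upsampled level above it; the coarsest level is the projection alone.
    """
    laterals = [lateral_1x1(f, w) for f, w in zip(backbone_maps, lateral_weights)]
    pyramid = [laterals[-1]]  # start from the coarsest map
    for lateral in reversed(laterals[:-1]):
        pyramid.insert(0, lateral + upsample2x(pyramid[0]))
    return pyramid

# Hypothetical backbone outputs (illustrative shapes, not the real network's).
rng = np.random.default_rng(0)
c3 = rng.standard_normal((8, 32, 32))
c4 = rng.standard_normal((16, 16, 16))
c5 = rng.standard_normal((32, 8, 8))

# Assumed lateral weights projecting every level to 4 shared channels.
weights = [rng.standard_normal((4, c.shape[0])) for c in (c3, c4, c5)]

p3, p4, p5 = fpn_top_down([c3, c4, c5], weights)
print(p3.shape, p4.shape, p5.shape)
```

Every pyramid level ends up with the same channel count, so a single detection head can be applied at each resolution. That matters for aerial imagery, where objects tend to occupy only a few pixels and depend on the finer levels.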